Earlier in the year, 2023 predictions highlighted the increasing modularity and distributed nature of new data center builds. As an industry, we may have begun our data center journey with a concentrated number of massive hyperscale data centers in key population centers, like London, Chicago, Tokyo, Northern Virginia, or Singapore, but increasingly, smaller, modular, less power-hungry data centers are being built either as individual entities or as part of multitenant, multi-building campus environments. Instead of consuming 50-150 MW, modular, distributed data centers may use a fraction of the space and power that hyperscale data centers do, which makes them easier and faster to build by utilizing smaller parcels of land and distributing their impact on local power grids. Modular, distributed data centers may also deliver increased diversity and reduced latency when located closer to the end users.

As I bike around the Chicago suburbs near my home or travel throughout Southeast Asia as I did in July, I can see firsthand the evolving data center landscape. In Aurora, Illinois, on the western edge of Chicagoland, a 65-acre site is being developed to host a campus with three data centers — right across the highway from a massive CyrusOne site that is expanding for the second time. In Singapore, with over 70 existing data centers consuming more than 7% of the country’s electricity, the government placed a three-year pause on new data center construction in 2019. Although the moratorium is now lifting, concerns about sustainability and environmental impact are driving the entire data center sector toward increased energy efficiency and reduced carbon emissions. The main beneficiary of this moratorium has been the adjacent Malaysian state of Johor, where new data centers are being built.

For example, Equinix recently announced it intends to open a new data center in the first half of 2024 in Nusajaya Tech Park (NTP), about 15 miles from Singapore. Additional Johor data centers are under construction or being planned.

But data centers do not exist in isolation. They require connectivity, specifically high-speed optical connectivity, to bind them to other data centers and to the people and applications that utilize their compute and storage resources, including rapidly growing AI/ML applications like ChatGPT. This connectivity can be local, regional, or, in the case of those Malaysian data centers, trans-oceanic subsea cables that cross the Straits of Johor and terminate in Singapore. How can we evolve and adapt data center interconnect (DCI) solutions to support the growing capacity demands of expanding hyperscale data centers while also supporting the diversity of smaller, modular, and distributed ones? The answer is threefold: more compact modular optical platform choices, innovations in embedded and pluggable coherent optical engines, and increased transmission spectrum per fiber pair. In short, we need to right-size initial DCI capacity and investment to match the data center’s day-one transmission demands while also enabling cost- and energy-efficient expansion over time.

A chassis to fit every environment

The DCI category of optical products began in 2015 with the debut of small, rapidly deployable server-like transponders with coherent optical engines. Since then, the industry has moved toward compact modular platforms with sleds that can be mixed and matched to support the desired functionality, as seen in Figure 1. Additional chassis options now include ones supporting 600-mm, 450-mm, and even 300-mm depths for “access like” deployments to cover a broad range of physical space requirements and data center environments. Although individual chassis are one or two rack units (RU) in height and support one or two rows of sleds, they can now be extended with multi-chassis connectivity that enables easy expansion while also being managed and operationalized as a single network element. This enables DCI operators to start with a 1- or 2-RU chassis and expand it as needed, matching cost to capacity. The latest compact modular platforms are also designed to support mixing and matching of both optical line system and transponder sleds. This approach is key to minimizing costs in smaller DCI deployments — enabling both line system and transponder functions to be combined into a single chassis instead of multiple units required with a dedicated per-function design approach.

Moving at the speed of light

With increased vertical integration, leading coherent optical engines are evolving in two directions simultaneously: smaller, lower-power pluggables that can reach up to 1,000 km or more, and embedded, sled-based optical engines with sophisticated transmission and reception technology that maximize capacity-reach and spectral efficiency.

Today’s 400G pluggable coherent transceivers fit into CFP2 and QSFP-DD packages and run at 15 to 25 watts. With increasing capabilities in the smaller QSFP-DD package, IP over DWDM (IPoDWDM) is starting to be realized with deployment directly into routers and switches. A wide variety of 400G coherent transceivers are available, including 400ZR, which has fixed settings, supports Ethernet-only traffic, and is designed to support DCI applications up to 120 km. 400G ZR+ is an umbrella term for 400G pluggables with more advanced functionality than ZR, including increased programmability, support for OTN and Ethernet traffic, and better optical performance to support metro-regional and some long-distance connectivity.

There are also 400G XR pluggables available that support both high-performance point-to-point applications, like ZR+, and point-to-multipoint deployments via digital subcarrier technology, where a single 400G pluggable can communicate simultaneously with multiple lower-speed (25 Gb/s or above) pluggables. This advanced architecture can deliver significant cost savings by reducing the number of optical transceivers by almost 50% and eliminating some intermediate aggregation switches and routers. With their small size and significantly reduced power per bit (less than 10% of the 100G coherent engines of 10 years ago), coherent pluggables help achieve sustainability goals and are an economic fit for data center interconnect distances below 1,000 km and where fiber is readily available.
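One way to see where a figure of "almost 50%" could come from is to count pluggables. The sketch below is only an illustration under a simplifying assumption that is not stated in the article: a point-to-point design needs one pluggable at each end of every hub-to-leaf link, while a point-to-multipoint design needs a single hub pluggable plus one per leaf. The function names are hypothetical.

```python
def transceiver_counts(leaf_sites: int) -> tuple:
    """Return (point_to_point, point_to_multipoint) pluggable counts.

    Assumption (illustrative only): every leaf site needs its own link
    to the hub, and no other optics are counted.
    """
    if leaf_sites < 1:
        raise ValueError("leaf_sites must be at least 1")
    point_to_point = 2 * leaf_sites       # one pluggable at each end of every link
    point_to_multipoint = leaf_sites + 1  # one hub pluggable plus one per leaf
    return point_to_point, point_to_multipoint


def savings_percent(leaf_sites: int) -> float:
    """Percentage reduction in pluggables when moving to point-to-multipoint."""
    p2p, p2mp = transceiver_counts(leaf_sites)
    return 100.0 * (p2p - p2mp) / p2p


if __name__ == "__main__":
    # 16 leaves at 25 Gb/s each would fill one 400G hub pluggable.
    for leaves in (4, 8, 16):
        p2p, p2mp = transceiver_counts(leaves)
        print(f"{leaves} leaves: {p2p} vs {p2mp} pluggables "
              f"({savings_percent(leaves):.1f}% fewer)")
```

Under this assumption the saving grows with the number of leaves and approaches, but never reaches, 50%. Savings from removed aggregation switches and routers are not modeled here.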

This brings us to embedded optical engines. Today’s leading engines deliver 800 Gb/s per wavelength at distances approaching 1,000 km. The same 800G engines can deliver 600 Gb/s up to 3,000 km and 400 Gb/s almost everywhere in the world, including the Asia-Pacific (APAC) subsea region at 10,000 km. Additional developments are underway to deliver 1.2 Tb/s per wavelength and beyond. Due to their high capacity-reach, embedded optical engines are ideal for long-distance DCI connectivity solutions, including across continents or oceans with subsea connectivity. Embedded engines are also ideal where fiber is scarce and high spectral efficiency is required. As an example, in situations where a data center operator is leasing fiber, embedded optical engines can reduce operational costs by maximizing the amount of data transmitted over a single fiber pair, avoiding the incremental costs associated with leasing a second fiber pair or trenching new fiber. The power consumption of today’s 800G coherent optical engines is also drastically improved, utilizing 89% less power per bit than similar engines 10 years prior.
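The rate-versus-reach trade-off described above can be sketched as a simple lookup. This is a rough illustration, not a planning tool: the thresholds are rounded from the figures quoted in the text, the table and function names are hypothetical, and real reach depends on fiber type, amplification, and margins that are not modeled here.

```python
import math
from typing import Optional

# (maximum reach in km, rate per wavelength in Gb/s), rounded from the text.
RATE_BY_REACH = [(1_000, 800), (3_000, 600), (10_000, 400)]


def max_rate_gbps(distance_km: float) -> Optional[int]:
    """Highest per-wavelength rate whose quoted reach covers the distance."""
    for max_reach_km, rate_gbps in RATE_BY_REACH:
        if distance_km <= max_reach_km:
            return rate_gbps
    return None  # beyond the distances quoted in the text


def wavelengths_needed(demand_gbps: float, distance_km: float) -> int:
    """Number of wavelengths required to carry the demand over the distance."""
    rate = max_rate_gbps(distance_km)
    if rate is None:
        raise ValueError("distance exceeds the reach figures quoted in the text")
    return math.ceil(demand_gbps / rate)


if __name__ == "__main__":
    for km in (500, 2_500, 9_000):
        print(f"{km} km: {max_rate_gbps(km)} Gb/s per wavelength, "
              f"{wavelengths_needed(3_200, km)} wavelengths for 3.2 Tb/s")
```

The same demand needs more wavelengths as distance grows, which is why spectral efficiency matters most on long or fiber-constrained routes.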

Putting more lanes on the highway

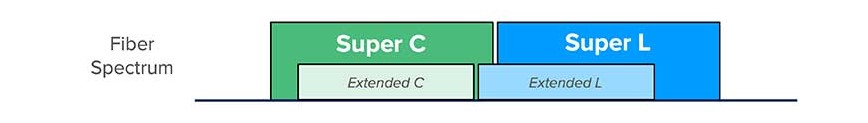

DWDM networks have traditionally used only C-band fiber spectrum. Out of necessity, webscale operators with hyperscale data centers were early adopters of C+L-band transmission on the same fiber. Just like adding automobile lanes to the autobahn in Germany or the interstate highway system in the U.S., expanding the usable spectrum on a fiber delivers more capacity. Hyperscale data centers with extraordinary capacity demands can double the amount of traffic per fiber pair by utilizing the latest embedded optical engines and an optical line system that supports transmission on both the C- and L-bands simultaneously. Although today’s extended C-band and the L-band each occupy 4.8 THz of adjacent spectrum, capacity gains per fiber pair have been dominated in recent years by more spectrally efficient coherent optical engines with advanced modulation schemes. As optical engine spectral efficiency gains diminish with each successive generation, a new approach is needed. With advancements in optical line system components like amplifiers and wavelength-selective switches, we can now cost-effectively increase the transmission spectrum from 4.8 THz to 6.1 THz in both the Super C and Super L-bands. For a small incremental line system infrastructure cost, we can realize 27% incremental spectrum and transmission capacity per fiber pair. Although still early, look for Super C and Super L DCI deployments to occur in the next few years.
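The 27% figure follows directly from the two spectrum widths quoted above, as the short calculation below shows. The channel-count part is an added illustration: the 150 GHz channel width is a hypothetical value chosen for the example, not a number from the article.

```python
BAND_GHZ = 4_800        # today's extended C-band or L-band, per the text
SUPER_BAND_GHZ = 6_100  # Super C or Super L band, per the text


def spectrum_gain_percent(old_ghz: int, new_ghz: int) -> float:
    """Relative increase in usable spectrum, in percent."""
    return 100.0 * (new_ghz - old_ghz) / old_ghz


def channel_count(band_ghz: int, channel_width_ghz: int) -> int:
    """Whole channels that fit in a band for a given channel width."""
    return band_ghz // channel_width_ghz


if __name__ == "__main__":
    gain = spectrum_gain_percent(BAND_GHZ, SUPER_BAND_GHZ)
    print(f"Spectrum gain per band: {gain:.1f}%")
    print(f"C+L total: {2 * BAND_GHZ / 1000:.1f} THz -> "
          f"{2 * SUPER_BAND_GHZ / 1000:.1f} THz")

    width = 150  # hypothetical channel width in GHz, for illustration only
    print(f"Channels per band at {width} GHz: "
          f"{channel_count(BAND_GHZ, width)} -> "
          f"{channel_count(SUPER_BAND_GHZ, width)}")
```

Because only whole channels fit in a band, the realized capacity gain for a particular channel width can differ slightly from the raw spectrum gain.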

Data center construction and the optical connectivity between them show no signs of abating. While still critical and growing, massive hyperscale data centers are giving way to an increasing number of smaller, modular, and diverse data centers. The changing data center landscape requires us to also rethink our approach to data center interconnect. DCI cannot remain a one-size-fits-all approach. Instead, we need a variety of sleds and chassis to fit all types of environments and enable us to have a low entry price, reduced power consumption, and a small footprint, while also easily scaling and evolving to meet future capacity demands. We need a combination of pluggable and embedded engines to match cost to capacity-reach performance. Finally, we can’t solve all our DCI challenges with faster/better optical engines. We also need to put more cars on the roadway if we want to serve more demand and maximize transmission per fiber pair. The symbiotic relationship between data centers and the DCI solutions that serve them continues.