Of the hundreds of thousands of gigabytes of data collected and stored within your mission critical facility, what factors are most likely to influence the value of a specific, key subset of data? What is meaningful and what is just noise?

DCIM VS. DCOM STRATEGIES FOR RELIABILITY

Most data center facility professionals focus on the design, implementation, system control, and monitoring of infrastructure. Our reliance on data center infrastructure management (DCIM), which focuses on the plant, property, and equipment (PP&E), has emphasized the idea that infrastructure is the critical element in predicting the reliability of a facility. This terminology fosters a “break-fix” mindset, when in reality the infrastructure is only as good as the people managing it.

Consider that at least 65% of downtime in mission critical facilities is the result of human error. DCIM systems may produce a large quantity of information through their continuous monitoring and alerts, but operators must still possess situational awareness: the ability to interpret incoming information and, as a result, make the right decisions. DCIM is what we physically see in a mission critical facility, but DCOM (data center operations management) is what goes on below the surface to bolster what we see. With this in mind, is a focus on infrastructure really the best strategy for reliability?

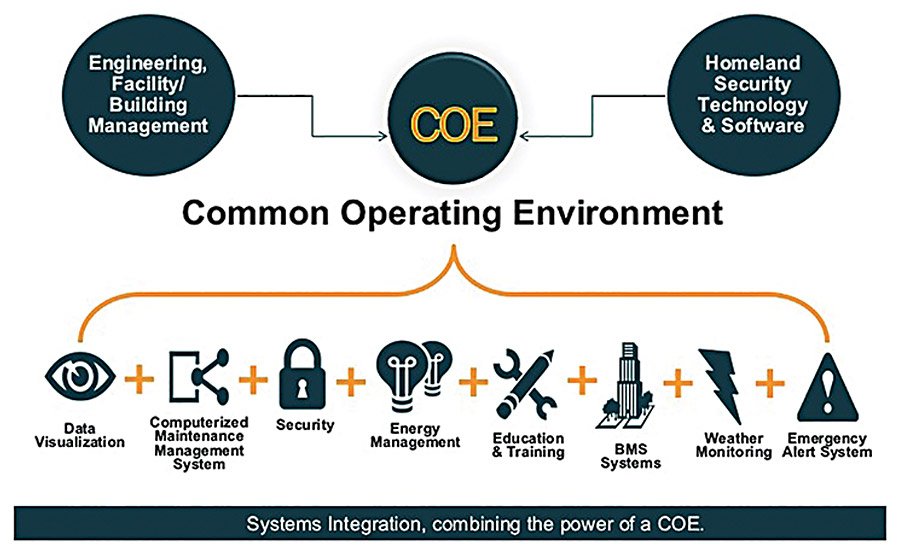

The operation of the PP&E cannot be viewed apart from the way in which we utilize the information produced by the myriad of related systems, including the building management system (BMS), the computerized maintenance management system (CMMS), the energy management system (EMS), and the security access system (SAS).

We must find a way to unify operations with these disparate systems, rather than requiring our operators to toggle back and forth between screens and run around the facility to tinker with the physical equipment. This involves updating processes and procedures that affect the entire enterprise, but the result is better decisions about predictive maintenance and proactive, holistic management of the facility. The only way to achieve this is to increase the precision of predictive analysis by incorporating “human data.”

IN PURSUIT OF PRECISION FOR PREDICTIVE ANALYSIS

Real-time access to data is the immediate value that DCIM tools bring to operating personnel. The constant polling of all the systems is an excellent convenience. When it comes to bringing together the various equipment data, DCIM tools are incredibly helpful and absolutely necessary for even moderately sized facilities. But at any given time, the majority of that information is not pertinent to the issues at hand, which inevitably involve operations. Addressing DCIM while ignoring DCOM is simply not precise enough.

The visual trending of data across time adds little value unless it can be leveraged to produce an actionable conclusion. Predictive analysis tools must therefore not only be efficient in data collection, trending, and simple analysis but also enhance the user’s ability to make actionable decisions, thereby preventing downtime or a loss of revenue. It is important that facility managers be able to view data blocks of readings over time, as well as automatically generated rounds reports. Assessment and audit functionalities need to allow for trending and the creation of health indexes by facility. Management can then look across all of its facilities to benchmark practices and enhance decision-making. They can see where capacity may be an issue, where energy and water use is the highest, and where capital investment may soon be needed based on the equipment lifecycle assessments.
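To make the idea of a per-facility health index concrete, here is a minimal sketch in Python. The categories, the weights, the 0-100 scoring scale, and the names `Assessment`, `health_index`, and `benchmark` are all assumptions made for illustration; they do not describe any particular DCIM or DCOM product.

```python
from dataclasses import dataclass

# Hypothetical category weights; a real program would derive these
# from its own assessment and audit criteria.
WEIGHTS = {"maintenance": 0.4, "capacity": 0.3, "energy": 0.3}


@dataclass
class Assessment:
    facility: str
    scores: dict[str, float]  # one 0-100 score per category in WEIGHTS


def health_index(a: Assessment) -> float:
    """Weighted average of the category scores, on the same 0-100 scale."""
    return sum(WEIGHTS[c] * a.scores[c] for c in WEIGHTS)


def benchmark(assessments: list[Assessment]) -> list[tuple[str, float]]:
    """Rank facilities from lowest to highest health index."""
    ranked = [(a.facility, round(health_index(a), 1)) for a in assessments]
    return sorted(ranked, key=lambda pair: pair[1])


# Illustrative scores only, not real facility data.
sites = [
    Assessment("Site A", {"maintenance": 90, "capacity": 60, "energy": 80}),
    Assessment("Site B", {"maintenance": 70, "capacity": 85, "energy": 75}),
]
print(benchmark(sites))
```

Sorting from the lowest index upward puts the facility most in need of attention first, which is the view a manager comparing sites would likely want.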

This intricacy has created a necessity for new dynamic tools and digital companions that can assist humans with all the operational and emergency tasks they face when operating in this environment.

WHAT IBM WATSON ANALYTICS HAS TO TELL US

Analytics adoption models for decision-making, even the simplest forms of analytics involving monitoring and reporting, have been commonplace in many businesses for several years now. Larger companies routinely regard more sophisticated methods for predictive and prescriptive analytics as an integral part of securing a competitive advantage in the marketplace. Watson is a particularly strong signifier of the need for transparency and collaboration between data procurement and business solutions: in essence, a shift in data’s role from enabling or enhancing business to becoming an instrumental, central component.

Since a trend analysis must start with a baseline, it is important to have a repository of data and documented readings to provide operators with information while they are performing maintenance, not afterwards when they return to the office.
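As a rough illustration of why the baseline matters, the sketch below builds a baseline from documented readings and flags new readings that drift away from it. The three-standard-deviation threshold, the function names, and the temperature values are assumptions chosen for the example, not a recommended operating limit.

```python
from statistics import mean, stdev


def build_baseline(readings: list[float]) -> tuple[float, float]:
    """Summarize documented readings as (mean, sample standard deviation)."""
    return mean(readings), stdev(readings)


def flag_deviations(
    baseline: tuple[float, float], new_readings: list[float], k: float = 3.0
) -> list[float]:
    """Return the new readings that sit more than k deviations from the mean."""
    center, spread = baseline
    return [r for r in new_readings if abs(r - center) > k * spread]


# Illustrative supply-air temperatures in degrees Celsius, not real data.
baseline_temps = [22.0, 22.4, 21.8, 22.1, 22.2]
baseline = build_baseline(baseline_temps)

# An operator enters three readings during a maintenance round.
flagged = flag_deviations(baseline, [22.3, 23.9, 21.9])
print(flagged)
```

Because the check runs as each reading is entered, the operator sees the out-of-range value while still standing at the equipment, not after returning to the office.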

Watson Analytics consists of a number of intuitive platforms for loading and analyzing data with the objective of improving the quality of this information as efficiently and as quickly as possible. What I am advocating for is the similar application of cognitive computing to the challenges of data analysis within a standardized approach to operations, service delivery, and maintenance while mitigating the risk associated with human error. Process control and comprehensive documentation, together, are part of this equation. Procedures are only effective when they form part of the process control, i.e., when employees can participate in intelligent ways in the loading and analyzing of data. Moreover, once procedures are actively being used, their value increases exponentially while the time investment decreases exponentially.

When functioning as designed, Watson Analytics combines natural language understanding with the ability to interpret and respond as an experienced professional might. This means identifying patterns and relationships between and within data sets without the need for a highly technical or complicated query. The goal is to move beyond statistics — including all that noisy embedded technology contained within almost every device found under a data center’s roof — and pinpoint with precision specific recommendations based on relative relevancy.

Almost any element can be tracked and its outputs measured, using supplier-issued RFID tags and bar codes. Some power and cooling systems can also be managed using remote communication systems. This development offers the potential for an unprecedented level of detail on capacity, usage, performance, peaks, and lulls, and it also presents a remarkable opportunity to optimize performance in anticipation of usage variations and other factors that impact performance. When operators are faced with an onslaught of new embedded technology information, a tool for predictive and prescriptive analytics (like Watson) would ideally help them make decisions rather than contribute to data overload. A worthy goal for the mission critical industry is to likewise optimize data set constraints and linguistic interpretation capabilities.

The advantage of a tool like Watson is that useful predictors can be viewed in a number of ways, from visualizations to decision trees to other kinds of visual documentation. Watson’s “assemble” capabilities include dashboards and a series of infographic templates. This is about translating information into a visual format in a minimal number of steps. The ideal tool for the mission critical industry would save parameters and provide graphs of readings over time so an operator could analyze trends. Such a tool would make the tracking and trending of information easier over time while reducing the overall time investment.

CATERING TO THE NEXT GENERATION OF FACILITY OPERATORS

Key to this discussion, Watson Analytics is equipped with the ability to improve the accuracy, quality, and precision of data sets and to do so quickly. Essential to this is the ability to provide instant feedback on the quality of the data sets. This capacity effectively functions as a passive teaching mechanism by training the user on how to procure increasingly better results. A similar tactic for the mission critical industry would be to enforce process discipline and procedural compliance without operators even realizing it. This could be accomplished by requiring the documentation and validation of all processes on a daily basis but within the construct of a roadmap for operational functions. Such a strategy enables accountability by guiding employees through each process step by step and requiring input into data fields, including in response to a question. This model is less about clicking (menu commands) than about learning how to drill down to the essential information in a considered and intelligent way.
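One way to picture such a guided procedure is the short Python sketch below. It walks an operator through a round one question at a time, requires a value for each step, and validates it immediately. The `Step` and `Procedure` classes, the prompts, and the acceptable ranges are hypothetical and exist only to illustrate the idea.

```python
from dataclasses import dataclass, field


@dataclass
class Step:
    prompt: str   # the question shown to the operator
    low: float    # lowest acceptable reading
    high: float   # highest acceptable reading


@dataclass
class Procedure:
    name: str
    steps: list[Step]
    log: list[tuple[str, float, bool]] = field(default_factory=list)

    @property
    def complete(self) -> bool:
        """True once every step has a recorded value."""
        return len(self.log) == len(self.steps)

    def record(self, value: float) -> bool:
        """Record the answer to the next step and report whether it is in range."""
        if self.complete:
            raise ValueError("all steps already recorded")
        step = self.steps[len(self.log)]
        in_range = step.low <= value <= step.high
        self.log.append((step.prompt, value, in_range))
        return in_range


# Illustrative daily round with made-up limits.
rounds = Procedure(
    "UPS daily round",
    [
        Step("Battery room temperature (C)?", 18, 27),
        Step("UPS load (%)?", 0, 80),
    ],
)
first_ok = rounds.record(24.5)   # within range
second_ok = rounds.record(86)    # out of range, still logged for follow-up
```

Steps can only be answered in order and every answer is logged with its validation result, so the record of the round doubles as the compliance documentation.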

The next generation of engineers and operators is conditioned to learn differently from past generations. These individuals have been using technology-based tools as part of the current education system and, consequently, they will be accustomed to learning through continuous education in small increments, recording results as they go, and combining training with the actual maintenance and operation of a facility. They will be comfortable with technology tracking their progress.

INTEGRATING THE HUMAN FACTOR

The capacity of individual components and systems is typically not managed effectively, and this mismanagement is a common cause of failures. Is it really a requirement to continuously review every bit of information from every piece of equipment? The answer is not so much about what data we should pull and when, but rather about what we do with the data once we collect it. How can we transform all of this data into useful information?