Liquid cooling (LC) has been used since the early mainframe days and has also been used to cool some supercomputers. More recently, air cooling became the predominant form of cooling for most computing systems. Over the past several years, however, many new LC technical developments and products have entered the market. This has been driven by several factors, such as the increased demand for greater power density, coupled with higher information technology (IT) performance for high-performance computing (HPC) and some hyper-scale computing, and the overall industry focus on energy efficiency.

While the thermal transfer effectiveness and energy efficiency of LC compared to air are well-known, deployments have been limited. However, this past year there have been many new developments, which make LC easier to implement and have increased interest in it. Even the major server OEMs are now offering LC for some models. LC in the data center is steadily advancing, both in technology and market acceptance.

Nonetheless, there are many myths and misnomers about LC in the industry. To address this, The Green Grid (TGG) recently published the Liquid Cooling Technology Update white paper #70. This white paper provides a high-level overview of IT and facility considerations related to cooling, along with a guide to state-of-the-art LC technology. It is intended for chief technology officers and IT system architects, as well as data center designers, owners, and operators. The paper defines and clarifies LC terms, system boundaries, topologies, and heat transfer technologies (https://www.thegreengrid.org/en/resources/library-and-tools/442-WP).

Moreover, while the industry is well aware of the ASHRAE Thermal Guidelines for air-cooled IT, fewer are aware that the 2015 guidelines now also cover Liquid Cooling categories W1 to W5. The TGG paper refers to existing terminology and methodologies from the American Society of Heating, Refrigerating, and Air-Conditioning Engineers (ASHRAE) Technical Committee 9.9 Liquid Cooling Guidelines (2016). However, it also includes recently developed LC technologies that may not be covered by current ASHRAE publications.

The white paper discusses the findings of the TGG Liquid Cooling workgroup, along with the key benefits, limitations, and business and technology considerations regarding the adoption of LC IT equipment. It also reviews the factors to consider for new facilities, as well as when it may make sense to consider adding LC to accommodate very high-density applications in existing data centers.

DATA CENTER HYDROPHOBIA?

Although LC is not new in the data center, in this “modern” age the majority of IT hardware is air cooled. For most people LC has become an antiquated concept and, in fact, some have a fear of water in the data center: data center hydrophobia.

Of course, the term LC has been used to market many cooling systems designed to provide so-called “supplemental” or “close coupled” cooling, such as in-row, contained cabinet, rear-door, or overhead style cooling units. These systems are primarily aimed at dealing with high-density heat loads, but all of them are still meant to support conventional air-cooled IT hardware. They support higher heat loads for air-cooled servers, typically 15 to 30 kW per rack, yet there are some applications, such as HPC, that demand much higher power densities. As power demands continue to increase in the zettabyte era, LC can help meet the energy efficiency and performance challenges of high-performance compute and high-density environments.

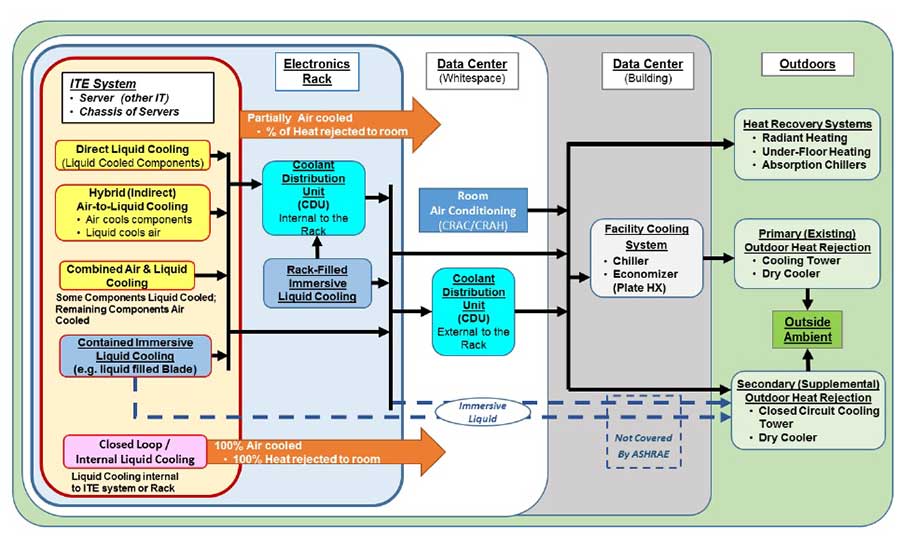

The paper is vendor neutral and covers a wide variety of LC technologies. One of the important aspects of the LC white paper was defining LC system boundaries and technologies. Figure 1 depicts five boundaries, as well as multiple possible heat transfer paths. It should be noted that the arrows denote the (liquid) flow of heat from the IT equipment (ITE) system to the outside ambient air along any single path. This is a key element: it establishes a framework for IT vendors and facility designers and defines the points where IT equipment and heat rejection systems interface.

In addition, cooling system efficiency for air-cooled IT equipment has vastly improved, especially over the last few years since the wider adoption of “free cooling.” As a result, PUEs for new designs have dropped substantially, to 1.1 or less for some internet giants’ sites that utilize direct airside free cooling and even to 1.3 for some not using outside air. Nonetheless, even as we approach the “perfect” PUE of 1.0, the fact remains that in most cases virtually all the energy used by the IT equipment still becomes waste heat. While there are some exceptions, such as using the warm air to heat administrative office spaces within the building, it is very difficult to make large scale use of the “low grade” heat from the IT exhaust air (typically 90° to 110°F). Even using rivers, lakes, or the ocean to absorb the heat does not change the fact that the energy is wasted and heat is added to our environment.

Moreover, in our quest for energy efficiency, we tend to forget that even the lowest PUE overlooks the fan energy within the IT equipment itself, which in most cases can range from 2% to 5% of the actual power delivered to the IT components. However, it can be even higher: 5% to 20% for 1U servers with eight to 10 small high-speed fans operating at high intake temperatures. Of course, the IT fan energy is in addition to the fan energy for the facility equipment (free cooling or conventional).
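To make the point about hidden fan energy concrete, here is a rough sketch in Python. It assumes that PUE is total facility power divided by total IT equipment power, and that fan power is expressed as a fraction of the power delivered to the non-fan IT components, as the percentages above suggest. The function name `fan_adjusted_pue` and the "adjusted" ratio itself are illustrative assumptions, not a metric defined by TGG or ASHRAE.

```python
def fan_adjusted_pue(pue: float, fan_fraction: float) -> float:
    """Ratio of total facility power to non-fan IT component power.

    Assumption (hypothetical metric, for illustration only):
      IT power = component power * (1 + fan_fraction)
      PUE      = facility power / IT power
    so facility power / component power = PUE * (1 + fan_fraction).
    """
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    if fan_fraction < 0.0:
        raise ValueError("fan_fraction must be non-negative")
    return pue * (1.0 + fan_fraction)


# PUE values and fan-energy fractions quoted in the text above.
for pue in (1.1, 1.3):
    for fan_fraction in (0.02, 0.05, 0.20):
        adjusted = fan_adjusted_pue(pue, fan_fraction)
        print(f"PUE {pue:.1f}, IT fans {fan_fraction:.0%}: "
              f"adjusted ratio {adjusted:.3f}")
```

Under these assumptions, a site reporting a PUE of 1.1 with servers whose fans draw 20% on top of component power would show an adjusted ratio of about 1.32, which is one way to see how much overhead the headline PUE can hide.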

So back to LC. Let’s look at a brief summary of the five classes in the ASHRAE Liquid Cooling Guidelines and the developments in the IT equipment and cooling systems that use LC. Classes W1-W3, which cover fluid temperatures of 35° to 90°F (2° to 32°C), are generally intended for incorporation into more traditional data center cooling systems. These are meant to be integrated with chilled water or condenser water systems or, at the higher ranges of W3, primarily with cooling towers. This can involve the use of fluid-to-fluid heat exchangers and IT equipment that can accept LC, or air-to-fluid heat exchangers within an IT cabinet. It is the W4 and W5 classes that really expand the ranges and are the basis for so-called “warm water” and “hot water” cooling systems.

HOT WATER COOLING?

While “hot water cooling” may seem like an oxymoron or at least a strange statement, in fact the Intel Xeon CPU is designed to operate at up to 170°F (the maximum case temperature at the heat transfer surface). This allows the CPU chip to be “cooled” by “warm water” (ASHRAE Class W4: 95°-113°F) or even “hot water” (W5: above 113°F). Moreover, the highest class, W5, is specifically focused on energy recovery and reuse of the waste heat energy, which is a much sought after long-term goal of the data center industry in general and of HPC/supercomputing in particular. In fact, IBM, HP, and Cray currently have liquid cooled mainframes and supercomputers.
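As a quick illustration of the temperature bands mentioned in this column, the following Python sketch maps a facility supply-water temperature to the class groupings quoted here. The thresholds are only the ones stated in this article (up to 90°F for W1-W3, up to 113°F for W4, above 113°F for W5); the function names are hypothetical, the individual W1, W2, and W3 limits are not broken out, and the ASHRAE guidelines remain the authoritative source.

```python
def fahrenheit_to_celsius(temp_f: float) -> float:
    """Convert a temperature from degrees Fahrenheit to degrees Celsius."""
    return (temp_f - 32.0) * 5.0 / 9.0


def liquid_cooling_class(supply_temp_f: float) -> str:
    """Return the class grouping for a supply-water temperature in deg F.

    Simplified assumption: each threshold is treated as an upper bound,
    using only the figures quoted in this article.
    """
    if supply_temp_f <= 90.0:
        return "W1-W3"   # traditional chilled/condenser water range
    if supply_temp_f <= 113.0:
        return "W4"      # "warm water" cooling
    return "W5"          # "hot water" cooling, aimed at heat reuse


for temp_f in (60.0, 100.0, 113.0, 130.0):
    temp_c = fahrenheit_to_celsius(temp_f)
    print(f"{temp_f:.0f}F ({temp_c:.1f}C): {liquid_cooling_class(temp_f)}")
```

The same conversion helper shows that the 113°F boundary between W4 and W5 corresponds to 45°C, and that the 170°F CPU case temperature is roughly 77°C, which is why water that feels "hot" can still cool the chip.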

In addition, immersion cooling of IT hardware has been on the periphery since earlier in the decade. It was originally introduced by Green Revolution Cooling and involves submerging the IT equipment in a non-conductive dielectric fluid (such as mineral oil), which effectively absorbs the heat from all components. One implementation (which in 2010 I described as a deep-fryer for servers) used standard 1U servers that had been modified by removing the fans and modifying or removing the hard drives. Technically speaking, from a heat transfer perspective it is very effective; however, as you can imagine, it raises a variety of issues from a practical viewpoint.

Since then, many vendors, from smaller startups to the major manufacturers, have made liquid cooled servers more convenient to install and service. In some cases, the server modules are sealed and can be inserted or removed as quickly and easily as any blade server module.

HITTING THE BOILING POINT

It should be noted that while most people associate LC with water, in fact many of the current products use a variety of “engineered” fluids, such as synthetic mineral oil and “phase change” fluids, which have excellent thermal transfer properties but are non-conductive. So while 113°F (or even 170°F at the chip) is not at the boiling point of water, phase change fluids “boil” at lower temperatures. As an alternative to the mineral oil immersion system, another more recent design was developed in conjunction with Intel using 3M™ Novec™ Engineered Fluid (a dielectric fluid with a low boiling point of approximately 120°F). One of its primary advantages is the improved heat transfer based on the phase change; another is that the condensing coils can operate at higher temperatures. This allows the final external heat rejection system to avoid the usage of water for evaporative cooling. It also provides an expanded range of heat reuse options due to the higher water temperatures.

Some vendors have produced closed loop systems that combine two or more isolated fluids to maximize thermal transfer and avoid water inside the IT hardware, while others, such as Dell with its third generation of liquid cooled Triton servers, pump water directly into the server blade. (Video link: https://www.youtube.com/watch?v=pNUv3ekSDOU) Yet other vendors have developed system racks that use sealed server modules containing dielectric fluids, with “dripless” connectors that snap into a rack backplane, which then circulates fluid through isolated passageways in the server module case.

Going a step further to alleviate hydrophobia, HP developed “Dry Disconnect Servers” that transfer heat to warm water cooled thermal bus bars in the chassis of their new Apollo line of supercomputers, thus avoiding fluid contact with the server modules and perhaps easing the concerns of potential customers.

As further proof that LC is ready for the mainstream data center, at the March 2017 DataCenterDynamics Enterprise Conference, held in New York City, STULZ, a major cooling equipment manufacturer, was exhibiting a cabinet (incorporating technology developed by CoolIT) that is specifically designed for liquid cooled servers.

THE BOTTOM LINE

So is there a more mainstream future for LC in the enterprise data center? Its primary driver is support for much higher heat densities while using much less energy to remove the heat. Cost is also a factor, and many users have the perception that liquid cooled servers are more expensive than air-cooled servers. While this is somewhat true at the moment, it is primarily due to the lower volume of liquid cooled servers and associated heat transfer systems. As pilot projects validate the IT performance increases and energy efficiency benefits of LC, adoption will increase, which will lower prices. This will ultimately make liquid cooled systems as cost effective as air-cooled IT equipment.

As the summer approaches and we begin to use more energy for our chillers and CRACs as we endeavor to keep our conventional servers “cool,” try to remember that the real future efficiency goal is not just to reduce or eliminate our dependence on mechanical cooling. Even if your data center manages to go 100% “free cooling,” we need to consider the long-term importance of how to recover and reuse the energy used by the IT equipment, rather than just continuing to dump the “waste” heat into our environment. So what is better than “free cooling”? Recently, in Stockholm, a government-backed initiative began offering space, power, networking, and cooling-as-a-service to data centers, and it will allow operators to sell their waste heat. So if you are not quite ready to jump into LC immediately, look a little ahead and download the TGG white paper, which is now free and, in the spirit of online shopping, includes “free shipping.”