The initial capital cost of a data center facility runs anywhere from $10 million to $30 million per megawatt of IT capacity. Despite these high costs, the average data center strands between 25% and 40% of its IT loading capacity through inefficient equipment layout and management. As a result, substantial financial losses are routinely incurred through lost IT loading capacity. Put another way: at the low end of that range, four typical data centers are needed to provide what three better-designed data centers could provide.
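The four-for-three comparison follows directly from the stranding figures. The arithmetic can be sketched in a few lines of Python; the function names are illustrative only and are not part of any product discussed in this paper, and the 1 MW facility size is an assumed round number chosen for the example.

```python
def usable_capacity(facilities: int, stranded_fraction: float) -> float:
    """Capacity delivered, in units of one fully utilized facility."""
    return facilities * (1.0 - stranded_fraction)


def stranded_capital(capex_per_mw: float, it_capacity_mw: float,
                     stranded_fraction: float) -> float:
    """Capital tied up in IT capacity that cannot be used, in dollars."""
    return capex_per_mw * it_capacity_mw * stranded_fraction


# Four facilities that each strand 25% deliver the work of three.
equivalent = usable_capacity(4, 0.25)

# Range of stranded capital for an assumed 1 MW facility, using the
# $10M-$30M per MW and 25%-40% figures quoted in the text.
low = stranded_capital(10e6, 1.0, 0.25)
high = stranded_capital(30e6, 1.0, 0.40)

print(f"Equivalent fully utilized facilities: {equivalent:.2f}")
print(f"Stranded capital per MW: ${low:,.0f} to ${high:,.0f}")
```

Under these assumptions, somewhere between $2.5 million and $12 million of capital per megawatt buys capacity that is never used.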

This case study shows how a virtual facility approach that leverages proactive mathematical simulation of data-center thermodynamic properties can minimize lost capacity far more effectively than a traditional iterative, multi-stage data center deployment methodology. This paper’s economic analysis shows the extent to which the virtual facility approach allows data center operators to realize the original operational intent of the data center and achieve its full intended lifespan.

Background

Full utilization of IT loading capacity requires an understanding of the interrelationships between a data-center’s physical layout and its power and cooling systems. For example, a lack of physical space might cause servers to be positioned in an arrangement that is non-optimal from a cooling perspective. Indeed, cooling is the most challenging element to control, as it is the least visible and least intuitive. Complicating matters, airflow management “best practices” are simply rules of thumb that can have unintended consequences if not applied holistically. The virtual facility approach provides the basis for this holistic methodology when modifying the data center.

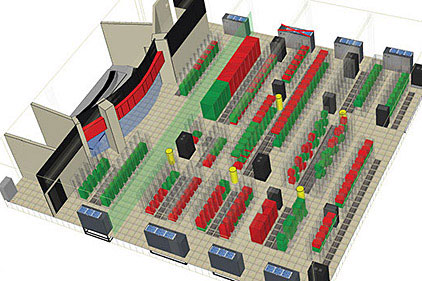

The Virtual Facility approach is a Predictive Modeling solution that uses a three-dimensional computer model of the physical data center as the central database and communication platform. The Virtual Facility integrates space, power and cooling management by combining 3D modeling, computational fluid dynamics (CFD) analysis and power system modeling in a single platform. These systems simulate data center performance and provide 3D visualizations of IT resilience and data center loading capacity. 6SigmaFM is the Predictive Modeling product from Future Facilities.

Methodology

In this case study, the data center of a large American insurance company is analyzed to compare the relative merits of using a straightforward CFD analysis system to isolate and correct thermal issues as they occur vs. using a comprehensive predictive DCIM solution to predict and avoid thermal problems before IT deployments and modifications are made.

Three successive IT deployment modifications are discussed in the context of the insurance provider’s data center. Methodologies for leveraging the predictive power of the 6SigmaDC suite of software to maximize IT loading capacity and optimize efficiency are discussed.

The Data Center

The data center considered here is an 8,400-sq-ft facility with a maximum electrical capacity of 700 kW available for IT equipment and 840 kW available for cooling with N+1 redundancy. The 4 kW cabinets are arranged in a hot-aisle/cold-aisle (HACA) configuration. Cooling comes from direct expansion (DX) units with a minimum supply temperature of 52°F. The data center’s IT equipment generally has a maximum inlet temperature of 90°F.

Iterative Approach

This data center was rolled out in three distinct deployments. All three deployments were planned at once, and CFD analysis was used only for the initial planning. The intent of deployments was to maximize the data-center IT load and minimize energy consumption. According to initial plans, the data center lifespan was to be ten years – in other words, the data center facility would be sufficient to supply the anticipated growth in demand for computational services for a decade.

First deployment. Upon the first deployment of IT equipment in the facility, some parameters met expectations. The facility was at only 25% of maximum heat load, and no rack consumed more than 2.59 kW of electricity (far below the recommended 4 kW limit). Despite this, equipment was at risk because certain network switches were ejecting waste heat sideways, increasing rack inlet temperature (RIT) to borderline levels.

To mitigate the problem, equipment placement within the racks was staggered to lower RIT. Keeping the equipment within the same racks reduced server and switch downtime, but the reduced equipment density caused by the non-optimal placement represented a deviation from operational intent, resulting in stranded capacity.

Second deployment. During the second wave of IT equipment additions, various servers and switches were introduced (again, in a staggered configuration) and the heat load of the data center increased to 45% of capacity — within the designers’ expectations. However, contrary to expectations, equipment at the top of many racks was overheating due to waste heat recirculation. The recirculation problems resulted from a failure to consider not only equipment placement, but also equipment power density. Moving certain devices to the bottom of each rack mitigated the problem, but as with the first deployment, more capacity was stranded.

Third deployment. During the final IT deployment, the IT load in kW reached 65% of capacity. Afterward, internal circulation was observed among racks two rows apart, and blanking panels were suggested as a possible fix. Counterintuitively, however, the blanking panels increased RIT, so they were removed. As a result, no additional equipment could be safely added, and roughly a third of the data center’s capacity was left unusable.

Virtual Facility Approach

Some of the problems encountered in a trial-and-error approach to data-center design can be avoided through the virtual facility approach. The virtual facility approach involves leveraging a continuous 3-D mathematical representation of the data-center’s thermal properties to anticipate future problems before they happen.

As stated above, after the third deployment of IT equipment, the data center was operating at 65% of maximum heat load. The air-conditioning units (ACUs), which had a total capacity of 528 kW in an N+1 configuration, were removing 354 kW of heat. Of that 354 kW, 286 kW came specifically from IT equipment. The highest cabinet load was 7.145 kW, and the highest inlet temperature was 86.5°F. Cooling airflow stood at 86,615 cubic feet per minute (cfm), and airflow through the grilles was 79.5% of maximum.
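The figures above can be combined into a few derived ratios that make the cooling picture easier to read. The following Python sketch is an illustration only: the variable names are hypothetical, and the average air temperature rise relies on the common standard-air approximation (heat in BTU/hr ≈ 1.08 × cfm × ΔT in °F), which is an assumption added here rather than a result reported by the case study.

```python
# Figures quoted in the text for the state after the third deployment.
HEAT_REMOVED_KW = 354.0   # total heat removed by the air conditioners
ACU_CAPACITY_KW = 528.0   # total air-conditioner capacity (N+1)
IT_HEAT_KW = 286.0        # portion of the heat load from IT equipment
AIRFLOW_CFM = 86_615.0    # cooling airflow

# Standard-air approximations (assumptions, not from the case study).
BTU_PER_HR_PER_KW = 3412.14
SENSIBLE_HEAT_FACTOR = 1.08  # BTU/hr per (cfm * deg F), sea-level air

# Fraction of installed cooling capacity in use.
cooling_utilization = HEAT_REMOVED_KW / ACU_CAPACITY_KW

# Fraction of the removed heat that originates in IT equipment.
it_share_of_heat = IT_HEAT_KW / HEAT_REMOVED_KW

# Airflow supplied per kW of heat removed.
cfm_per_kw = AIRFLOW_CFM / HEAT_REMOVED_KW

# Average air temperature rise implied by the heat load and airflow.
avg_delta_t_f = (HEAT_REMOVED_KW * BTU_PER_HR_PER_KW) / (
    SENSIBLE_HEAT_FACTOR * AIRFLOW_CFM
)

print(f"Cooling utilization: {cooling_utilization:.1%}")
print(f"IT share of heat load: {it_share_of_heat:.1%}")
print(f"Airflow per kW: {cfm_per_kw:.0f} cfm/kW")
print(f"Average air temperature rise: {avg_delta_t_f:.1f} deg F")
```

With these inputs, roughly 67% of the installed cooling capacity is in use, about 81% of the heat comes from IT equipment, and the airflow works out to about 245 cfm per kW, for an average air temperature rise of about 13°F. These are room-wide averages; they say nothing about the local recirculation that drives individual inlet temperatures, which is what the CFD model is for.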

6SigmaRoom was used to calibrate the initial CFD model so that it reflected the actual airflow patterns. CFD analysis in 6SigmaRoom indicated that strategic placement of blanking panels would lower inlet temperatures enough to allow the heat load to rise to 92% of capacity without risk of equipment damage. The end result is a potential increase in the useful life of the data center.
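To put the difference between 65% and 92% in rough economic terms, the sketch below applies both percentages to the facility's 700 kW of IT electrical capacity and prices the difference at the $10 million to $30 million per megawatt quoted at the start of this paper. Applying the percentages to the 700 kW figure is an assumption made for illustration; the case study does not state which capacity figure they are measured against, and the variable names are hypothetical.

```python
IT_CAPACITY_KW = 700.0           # electrical capacity available for IT
ITERATIVE_LOAD_FRACTION = 0.65   # load reached with the iterative approach
VIRTUAL_LOAD_FRACTION = 0.92     # load indicated as safe by the CFD analysis
CAPEX_PER_MW_RANGE = (10e6, 30e6)  # dollars per MW of IT capacity

# Capacity that becomes usable again, as a fraction and in kW
# (assuming both percentages refer to the 700 kW IT capacity).
recovered_fraction = VIRTUAL_LOAD_FRACTION - ITERATIVE_LOAD_FRACTION
recovered_kw = recovered_fraction * IT_CAPACITY_KW

# Capital value of the recovered capacity at each end of the cost range.
recovered_value = tuple(
    capex * recovered_kw / 1000.0 for capex in CAPEX_PER_MW_RANGE
)

print(f"Recovered capacity: {recovered_fraction:.0%} ({recovered_kw:.0f} kW)")
print(f"Capital value: ${recovered_value[0]:,.0f} to ${recovered_value[1]:,.0f}")
```

Under these assumptions the recovered capacity is 27 percentage points, or about 189 kW, corresponding to roughly $1.9 million to $5.7 million of capital that would otherwise sit idle.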

Conclusion

Because airflow patterns become less predictable as the IT configuration builds over time, it is recommended that CFD be used continuously and as a component of IT operations, and not just during the initial design phase of a data center.

The virtual facility approach bridges the gap between IT and facilities so that personnel who are experts with servers and switches can communicate with personnel who are experts in HVAC and electrical systems. Systems like 6SigmaRoom validate mechanical layout and IT configuration, while 6SigmaFM provides predictive DCIM, capacity protection, and workflow management. Finally, the predictive CFD modeling capabilities included in the 6SigmaDC suite can detect potential problems resulting from poor airflow management, floor-tile layout, and equipment load distribution.